Chat with Database

Live ChatGPT Streamlit Lanchain Database Chat App

We are building a chat bot which will take a question in a natural language & then query the database i.e., convert the question into a database query. Give back results of the query in plain English.

The App is built using

- Streamlit for the front end i.e., UI

- ChatGPT – As Large Language Model, which will convert the Question posed to a Database query

- Langchain – Which connects Streamlit with ChatGPT

Its very simple to build and its all built using python , so there is no need to learn a new programming language

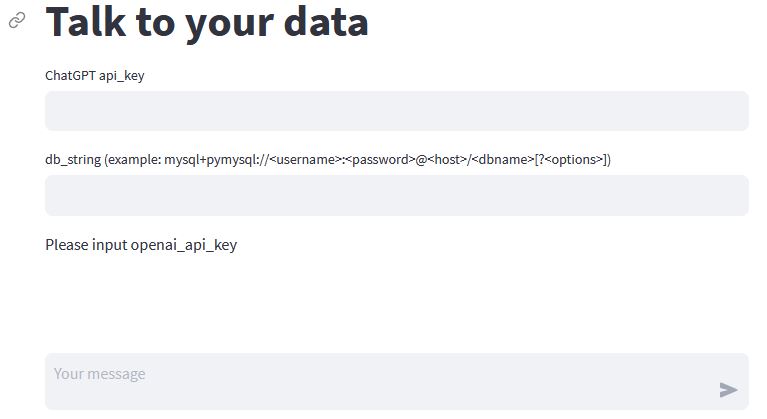

Final App’s Look & Feel:

Steps to Build the App

-

- Install langchain and streamlit

The best way to install these 2 packages is by creating a venv or conda environment , this isolates the packages

- Install langchain and streamlit

pip install langchain streamlit

2) Data Connection String for Database

Langchain uses SQLAlchemy to connect to your database , so any database compatable to SQLAlchemy will do. You just need the data connection string so that langchain can connect to the database. Below is example for connecting to a MySQL database

mysql+pymysql://<username>:<password>@<host>/<dbname>[?<options>]

3) Set up a streamlit app

You need a little bit of code for the front end. However, its all very easy. First, you need to import streamlit & then use simple code to make the UI

4) Langchain SQL Agent – talks to your Database

Sql agent is used to explore your database. This agent is powered by a LLM – openai or any other.

SQL chain can also be used instead however its more of a manual process

5) Hook up langchain and streamlit

StreamlitCallbackHandler is what makes langchain and streamlit talk to each other. Each time lanchain agent responds it calls a function to update the UI.

Below is the code for this project:

import SQLDatabase from langchain.llms

import OpenAI from langchain.agents

import AgentType, initialize_agent, load_tools from langchain.callbacks

import StreamlitCallbackHandler from langchain.agents

import create_sql_agent from langchain.agents.agent_toolkits

import SQLDatabaseToolkit

import streamlit as st

st.title("Talk to your data")

api_key = st.text_input("api_key")

db_string = st.text_input("db_string")

if api_key:

db = SQLDatabase.from_uri(db_string)

toolkit = SQLDatabaseToolkit(db=db, llm=OpenAI(temperature=0, openai_api_key=api_key))

agent_executor = create_sql_agent( llm=OpenAI(temperature=0, streaming=True, openai_api_key=api_key), toolkit=toolkit, verbose=True, agent_type=AgentType.ZERO_SHOT_REACT_DESCRIPTION,

)

else:

st.write("Please input openai_api_key")

if prompt := st.chat_input():

st.chat_message("user").write(prompt)

with st.chat_message("assistant"):

st_callback = StreamlitCallbackHandler(st.container())

response = agent_executor.run(prompt, callbacks=[st_callback])

st.write(response)

save the file as app.py & run it with:

streamlit run app.py

You can either deploy the application on Streamlit cloud or on your own server. Below is the dockerfile to deploy it on your own server:

FROM python:3.11

WORKDIR /app

COPY requirements.txt .

RUN pip install -r requirements.txt

COPY ./app .

CMD ["streamlit", "run", "index.py"]

You now have a working chatbot which works with most of the databases

Notes about Limitations:

- 5000 tokens per request – Openai has this upper limit, so if you have a large data schema to upload then it will fail

- Paid – Openai is a paid service or you have to host your own LLM on a GPU

- ChatGPT – This is a general purpose LLM which is trained to do daily tasks. You might need to use a LLM which is more focused on generating SQL queries. Hugging face has LLMs which are specifically trained for generating SQL queries

So use this code as a starting point to explore getting responses from the database. You will need to refine it further to make it more useful for your particular use case , given the above limitations

You can download the code from here on GitHub