Mounting / Storing Files in Your Google Drive

The code below imports the drive module and mounts your Google Drive as a local drive on the Colab VM. You can then access your Drive files at the path “/content/gdrive/My Drive/”.

Note: You can only mount the same Google Drive in which your Google Colab notebook is saved.

from google.colab import drive

drive.mount('/content/gdrive')
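A quick way to confirm that the mount worked is to list the top level of your Drive from Python. This is a minimal sketch using only the standard library. `list_drive_folder` is a hypothetical helper name, and it returns an empty list if the path does not exist (for example, before the drive is mounted).

```python
import os


def list_drive_folder(path="/content/gdrive/My Drive"):
    """Return the sorted names of the entries in `path`, or [] if the path does not exist."""
    if not os.path.isdir(path):
        return []
    return sorted(os.listdir(path))


# After drive.mount('/content/gdrive'), this should show your Drive's top-level items.
print(list_drive_folder()[:10])
```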

Symbolic link to mounted Google Drive

A symbolic link is used because the space in “My Drive” is awkward to handle in Colab shell commands and paths. You can use the symbolic link “/content/yoloV8_fruit_veggies_detection” to access the files in that directory.

Note: Create a directory named yoloV8_fruit_veggies_detection in your Google Drive before creating the link.

!ln -s "/content/gdrive/My Drive/yoloV8_fruit_veggies_detection" /content/yoloV8_fruit_veggies_detection
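One pitfall with the ln -s cell is running it a second time: because the link name then already points to a directory, ln creates another link inside your Drive folder instead of failing. The minimal Python sketch below only creates the link when nothing exists at that path yet. `ensure_symlink` is a hypothetical helper; the paths in the usage comment are the ones from this notebook.

```python
import os


def ensure_symlink(target, link_path):
    """Create a symbolic link link_path -> target if nothing exists at link_path yet.

    Returns True if a new link was created, False if link_path was already present.
    """
    # lexists() is also True for a broken symlink, so an existing link is never replaced
    if os.path.lexists(link_path):
        return False
    os.symlink(target, link_path)
    return True


# Usage in this notebook (hypothetical helper, same paths as the ln -s cell):
# ensure_symlink("/content/gdrive/My Drive/yoloV8_fruit_veggies_detection",
#                "/content/yoloV8_fruit_veggies_detection")
```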

Unlink the Symbolic Link

If you want to remove the symbolic link, run:

!unlink /content/yoloV8_fruit_veggies_detection
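unlink removes only the link, not the Drive folder it points to. If you prefer to do this from Python, the sketch below uses a hypothetical `remove_symlink` helper that refuses to touch anything that is not a symbolic link, so a real directory at that path is left alone.

```python
import os


def remove_symlink(link_path):
    """Remove link_path only if it is a symbolic link; return True if it was removed."""
    if os.path.islink(link_path):
        os.unlink(link_path)  # deletes the link itself, not the files it points to
        return True
    return False


# Usage in this notebook (hypothetical helper):
# remove_symlink("/content/yoloV8_fruit_veggies_detection")
```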

Check the type of GPU

!nvidia-smi
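To read the GPU details from Python instead (for example, to log them with your training runs), you can use nvidia-smi's query mode. This sketch relies on the `--query-gpu` and `--format=csv,noheader` options of nvidia-smi. `gpu_info` is a hypothetical helper that returns an empty list when no NVIDIA driver is available, such as on a CPU-only runtime.

```python
import shutil
import subprocess


def gpu_info():
    """Return one 'name, memory' string per GPU reported by nvidia-smi, or [] if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []  # no NVIDIA tooling on this machine
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []  # nvidia-smi exists but could not talk to a driver
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


print(gpu_info())
```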

Get Present Directory

Get the current working directory and store it in the HOME variable. HOME is used later to refer to files stored in Colab's temporary local storage.

import os

HOME = os.getcwd()

print(HOME)

Install YOLOv8

Install ultralytics, the package that contains YOLOv8, and check that YOLOv8 is working as expected.

!pip install ultralytics==8.0.20

from IPython import display

display.clear_output()

import ultralytics

ultralytics.checks()
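Later cells rely on the package layout of the pinned release (for example, the configuration file path used below), which may differ in other versions. A small check with the standard library's importlib.metadata can confirm which version is actually installed. `installed_version` is a hypothetical helper.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# This notebook pins ultralytics==8.0.20; print a warning if something else is active.
found = installed_version("ultralytics")
if found != "8.0.20":
    print(f"Expected ultralytics 8.0.20, found {found}")
```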

Check configuration file

The configuration file defines all the parameters the model uses for training, for example epochs, batch, and imgsz. Print it to see their default values:

!cat {os.path.dirname(ultralytics.__file__)}/yolo/cfg/default.yaml
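If you only want a few values from the configuration file rather than the whole listing, a tiny reader for its top-level key: value lines is enough. This is a rough sketch, not a YAML parser: it assumes the file is flat (an assumption about default.yaml), it skips indented lines, and it treats everything after a # as a comment. `read_flat_yaml` is a hypothetical helper; where PyYAML is available, yaml.safe_load is the more robust choice.

```python
def read_flat_yaml(path, keys):
    """Return {key: value_string} for requested top-level `key: value` lines (rough sketch)."""
    found = {}
    with open(path, encoding="utf-8") as f:
        for raw in f:
            # Skip blank lines, full-line comments, and indented (nested) lines
            if not raw.strip() or raw.lstrip().startswith("#") or raw[0].isspace():
                continue
            key, sep, value = raw.partition(":")
            if not sep:
                continue
            key = key.strip()
            value = value.split("#", 1)[0].strip()  # drop trailing inline comments
            if key in keys:
                found[key] = value
    return found


# Hypothetical usage (path assumes the yolo/cfg layout of ultralytics 8.0.20):
# import os, ultralytics
# cfg = os.path.join(os.path.dirname(ultralytics.__file__), "yolo", "cfg", "default.yaml")
# print(read_flat_yaml(cfg, {"epochs", "batch", "imgsz"}))
```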

Import Packages needed for Training

Import the YOLO class from the ultralytics package, along with IPython helpers for displaying images.

from ultralytics import YOLO

from IPython.display import display, Image

YOLOv8 Command Line Interface (CLI)

The YOLO CLI is the simplest way to get started with training, validation, or inference when you don't need to modify any code. For more information, see the Ultralytics YOLO Docs.

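The general form is yolo task=... mode=... model=... args..., for example:

yolo task=detect mode=train model=yolov8n.yaml args...

task can be detect, classify, or segment; mode can be train, predict, val, or export; and model can be a configuration or weights file such as yolov8n.yaml, yolov8n-cls.yaml, yolov8n-seg.yaml, or yolov8n.pt. Export mode also takes a target format:

yolo task=detect mode=export model=yolov8n.pt format=onnx args...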
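The key=value style also makes these commands easy to build from Python when you want to keep all settings in one place. The sketch below uses a hypothetical `build_yolo_cmd` helper and shlex.quote, so arguments containing spaces (such as paths under “My Drive”) are quoted safely. In Colab, the resulting string can be run with !{cmd}.

```python
import shlex


def build_yolo_cmd(task, mode, **args):
    """Assemble a `yolo` CLI command string from key=value arguments (hypothetical helper)."""
    parts = ["yolo", f"task={task}", f"mode={mode}"]
    for key, value in args.items():
        parts.append(f"{key}={value}")  # e.g. plots=True, epochs=3
    # Quote each token so values containing spaces survive the shell
    return " ".join(shlex.quote(p) for p in parts)


cmd = build_yolo_cmd("detect", "train", model="yolov8s.pt", epochs=3, imgsz=1280, plots=True, batch=8)
print(cmd)
```

Keeping the arguments in a dict or function call makes it harder for the training and resume commands to drift apart.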

Inference with Pre-trained COCO Model

With mode=predict, YOLO automatically downloads the model weights from the latest YOLOv8 release and saves the prediction results to runs/detect/predict.

%cd {HOME}

!yolo task=detect mode=predict model=yolov8n.pt conf=0.25 source='https://media.roboflow.com/notebooks/examples/dog.jpeg' save=True

Displaying Predicted Image

Display the predicted image, with its bounding boxes, in the notebook.

%cd {HOME}

Image(filename='runs/detect/predict/dog.jpeg', height=600)
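YOLO does not overwrite earlier results. Each new run goes into a new numbered folder (predict, predict2, predict3, ...), so a hard-coded runs/detect/predict path can point at an older run. The minimal sketch below picks the most recently modified matching folder. `latest_run_dir` is a hypothetical helper, and "most recent" is judged by folder modification time.

```python
import os
import re


def latest_run_dir(parent, prefix="predict"):
    """Return the newest folder named prefix, prefix2, prefix3, ... inside parent, or None."""
    if not os.path.isdir(parent):
        return None
    pattern = re.compile(re.escape(prefix) + r"\d*")  # matches "predict", "predict2", ...
    runs = [
        os.path.join(parent, name)
        for name in os.listdir(parent)
        if pattern.fullmatch(name) and os.path.isdir(os.path.join(parent, name))
    ]
    return max(runs, key=os.path.getmtime) if runs else None


# Hypothetical usage:
# run = latest_run_dir(f"{HOME}/runs/detect")
# Image(filename=os.path.join(run, "dog.jpeg"), height=600)
```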

Downloading Dataset from Roboflow

We’ll download an open-source dataset from Roboflow to train the model. If you want to inspect the dataset, you can find it at this link: https://universe.roboflow.com/bohni-tech/fruits-and-vegi

!mkdir -p {HOME}/datasets

%cd {HOME}/datasets

!pip install roboflow --quiet

from roboflow import Roboflow

rf = Roboflow(api_key="YOUR_ROBOFLOW_API_KEY")  # replace with your own Roboflow API key

project = rf.workspace("bohni-tech").project("fruits-and-vegi")

dataset = project.version(13).download("yolov8")
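Before training, it can be worth checking that the download contains images in each split. This sketch assumes Roboflow's YOLOv8 export layout, with train, valid, and test folders that each contain images and labels subfolders (the test/images path used later in this notebook follows that layout). `count_split_images` is a hypothetical helper, and the list of image extensions is an assumption.

```python
import os

# Assumed set of image extensions; adjust if your export uses others
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def count_split_images(dataset_dir, splits=("train", "valid", "test")):
    """Return {split: number of image files in <split>/images}; missing splits count as 0."""
    counts = {}
    for split in splits:
        img_dir = os.path.join(dataset_dir, split, "images")
        if not os.path.isdir(img_dir):
            counts[split] = 0
            continue
        counts[split] = sum(
            1 for name in os.listdir(img_dir)
            if os.path.splitext(name)[1].lower() in IMAGE_EXTS
        )
    return counts


# Hypothetical usage: print(count_split_images(dataset.location))
```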

Training – Changing Directory to Google Drive

Training starts from a Google Drive directory because YOLOv8 saves its runs/ output folder, including the trained model, relative to the directory where execution starts. Changing into a Drive folder first means the trained model is kept in Google Drive after the Colab session ends. The configuration file printed earlier also lists a project argument for the output directory, which may be an alternative, but this notebook uses the change-directory approach.

I created a subdirectory named train_march_23_2023 in my Google Drive to save the model in. Change the name and create your own subdirectory.

%cd /content/yoloV8_fruit_veggies_detection/train_march_23_2023

!pwd

Training the model

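Start training the model. The parameters are: model=yolov8s.pt (start from the pre-trained YOLOv8 small weights), data (path to the dataset's data.yaml), epochs=3 (number of passes over the training data), imgsz=1280 (input image size in pixels), plots=True (save training plots), and batch=8 (images per batch).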

!yolo task=detect mode=train model=yolov8s.pt data={dataset.location}/data.yaml epochs=3 imgsz=1280 plots=True batch=8
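The same training run can also be started from Python with the YOLO class imported earlier, where keyword arguments mirror the CLI's key=value pairs. This is a sketch: `train_yolov8` is a hypothetical wrapper, the default values are the ones from the command above, and results are written to the same runs/detect/train* folders under the current directory.

```python
def train_yolov8(data_yaml, epochs=3, imgsz=1280, batch=8):
    """Python-API counterpart of the `yolo task=detect mode=train ...` command (sketch)."""
    from ultralytics import YOLO  # imported here so ultralytics is only needed when called

    model = YOLO("yolov8s.pt")  # start from pre-trained YOLOv8s weights
    # Keyword arguments correspond to the CLI's key=value pairs
    return model.train(data=data_yaml, epochs=epochs, imgsz=imgsz, batch=batch, plots=True)


# Hypothetical usage, from the Drive training directory:
# train_yolov8(f"{dataset.location}/data.yaml")
```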

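Resuming Training Checkpoint Location

Set resume_location to the last.pt checkpoint of the interrupted run (train7 here). last.pt is saved after every epoch and holds the most recent training state, while best.pt holds the best-scoring epoch, so last.pt is the file to resume from.

resume_location='/content/yoloV8_fruit_veggies_detection/train_march_23_2023/runs/detect/train7/weights/last.pt'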

Resuming Model Training from Checkpoint in Google Colab

!yolo task=detect mode=train data={dataset.location}/data.yaml epochs=30 imgsz=1280 plots=True batch=8 resume=True model={resume_location}
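From Python, resuming follows the same pattern: load the checkpoint, then call train with resume=True. This sketch follows the usage shown in the current Ultralytics docs. Whether it behaves identically in the pinned 8.0.20 release is an assumption, and `resume_training` is a hypothetical wrapper. Point it at the last.pt file of the interrupted run.

```python
def resume_training(last_checkpoint):
    """Resume an interrupted YOLOv8 run from its last.pt checkpoint (sketch)."""
    from ultralytics import YOLO  # imported here so ultralytics is only needed when called

    model = YOLO(last_checkpoint)  # load the interrupted run's checkpoint
    return model.train(resume=True)  # continue training from the saved state


# Hypothetical usage:
# resume_training(resume_location)
```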

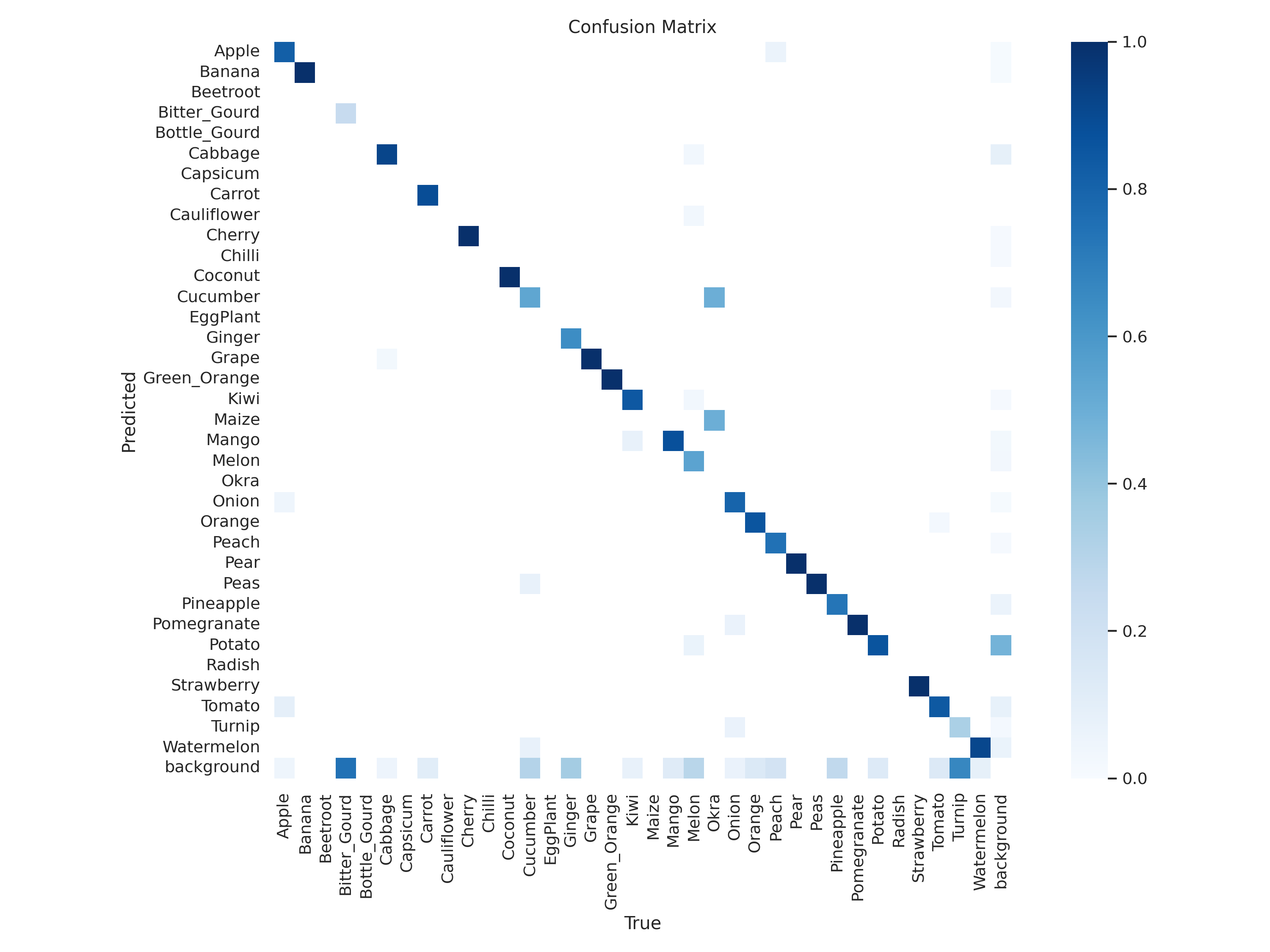

Confusion Matrix

gdrive_path = '/content/yoloV8_fruit_veggies_detection/train_march_23_2023/runs/detect'

Image(filename=f'{gdrive_path}/train5/confusion_matrix.png', width=600)

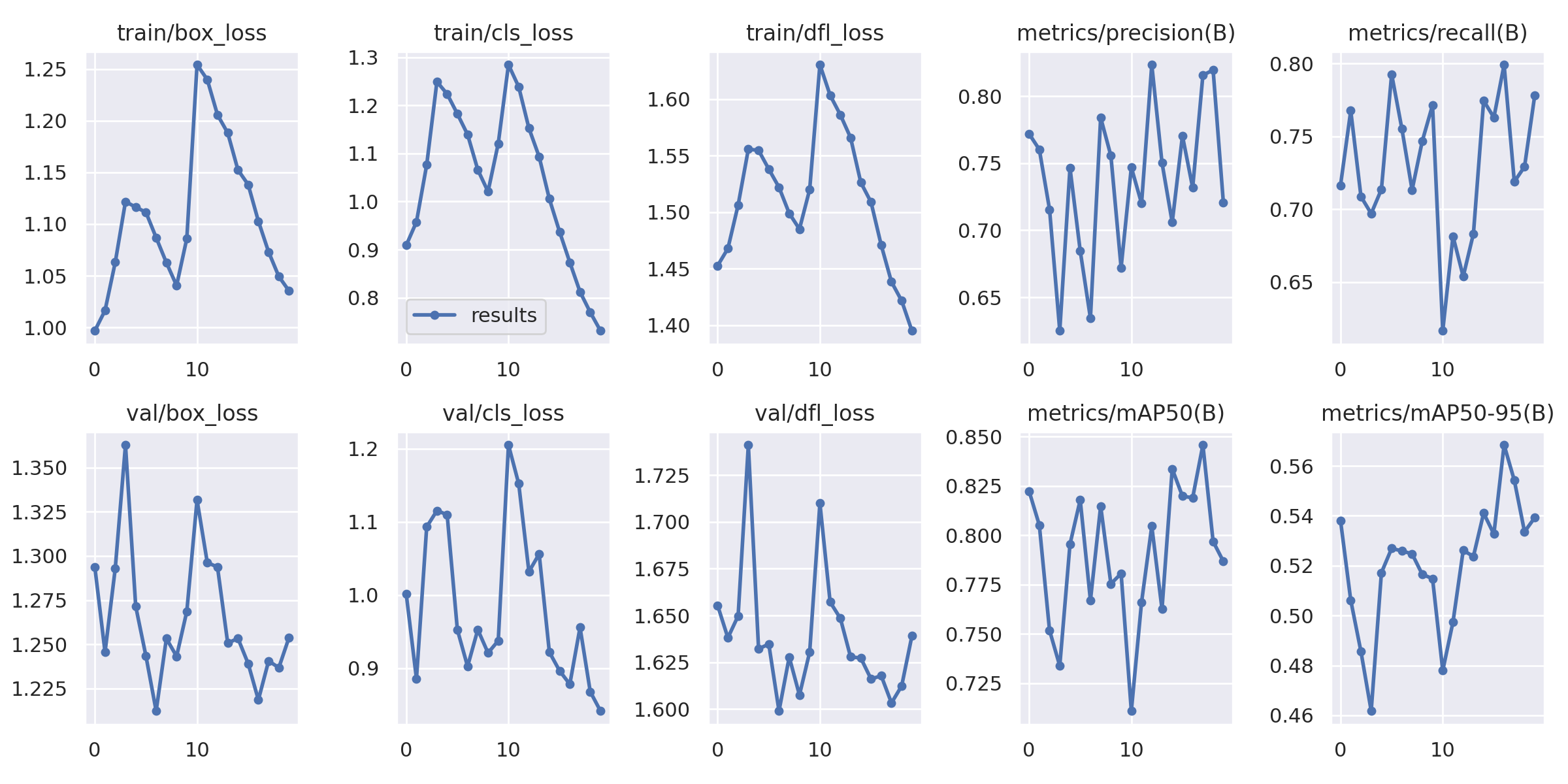

Accuracy Parameters

The results plot shows the training and validation losses and the accuracy metrics (precision, recall, mAP50, and mAP50-95) for each epoch. These are used to judge how well the model has been trained.

Image(filename=f'{gdrive_path}/train5/results.png', width=600)
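The values behind results.png are also saved as results.csv in the same run folder, which makes it easy to find the best epoch programmatically. In this sketch, the column name metrics/mAP50-95(B) is an assumption about the CSV header (check the first line of your file), and header names are stripped because they may be padded with spaces. `best_epoch` is a hypothetical helper that returns the epoch number as written in the file.

```python
import csv


def best_epoch(results_csv, metric="metrics/mAP50-95(B)"):
    """Return (epoch, value) for the row with the highest `metric`, or None if there are no rows.

    Raises ValueError if the `epoch` or `metric` column is not in the header.
    """
    best = None
    with open(results_csv, newline="") as f:
        reader = csv.reader(f)
        header = [h.strip() for h in next(reader)]  # headers may be padded with spaces
        epoch_col = header.index("epoch")
        metric_col = header.index(metric)
        for row in reader:
            if not row:
                continue
            value = float(row[metric_col])  # float() ignores surrounding whitespace
            if best is None or value > best[1]:
                best = (int(float(row[epoch_col])), value)
    return best


# Hypothetical usage: print(best_epoch(f"{gdrive_path}/train5/results.csv"))
```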

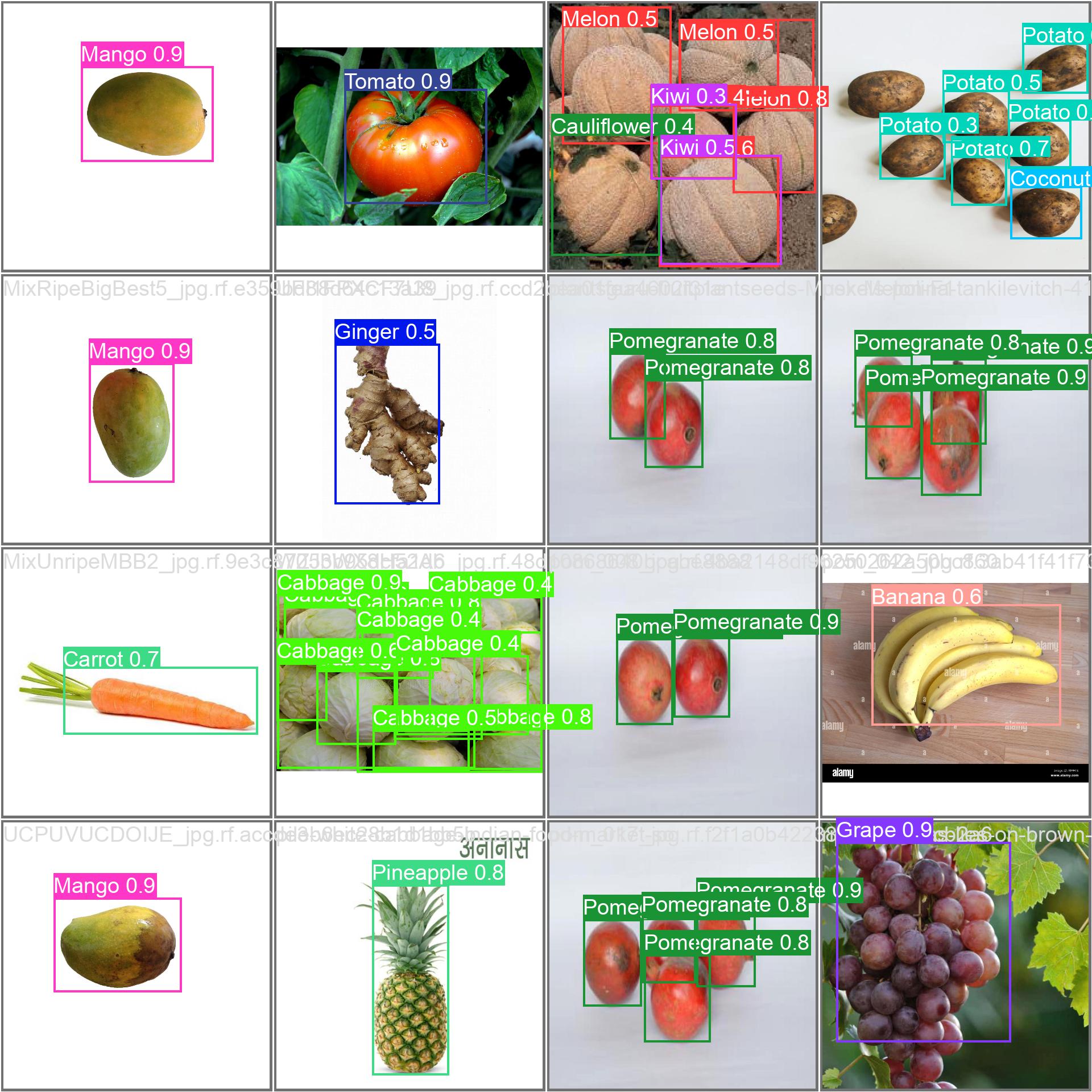

Predict on Test Image

Check how well the model is trained by looking at its predictions on a validation batch (val_batch2_pred.jpg is saved during validation):

Image(filename=f'{gdrive_path}/train5/val_batch2_pred.jpg', width=600)

Validate Custom Model

Check how the model performs on the validation data, for each class and overall, using precision (P), recall (R), mAP50, and mAP50-95:

!yolo task=detect mode=val model={gdrive_path}/train5/weights/best.pt data={dataset.location}/data.yaml
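Both mAP metrics depend on Intersection over Union (IoU): the overlap between a predicted box and a ground-truth box, divided by the area the two boxes cover together. mAP50 counts a prediction as correct when its IoU with a ground-truth box of the same class is at least 0.5, while mAP50-95 averages the result over IoU thresholds from 0.50 to 0.95 in steps of 0.05. A minimal IoU function for corner-format boxes (x1, y1, x2, y2) is sketched below. It is an illustration of the formula, not the code Ultralytics uses internally.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2) corners."""
    # Corners of the intersection rectangle
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero when the boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 10x10 boxes shifted by half their width overlap by 50 of 150 units of area,
# so IoU is 1/3: below 0.5, this prediction would not count as a match for mAP50.
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
```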

Inference with Custom Model

Use the model to run inference on the test images, then take a look at the predicted images.

!yolo task=detect mode=predict model={gdrive_path}/train5/weights/best.pt conf=0.25 source={dataset.location}/test/images save=True

import glob

from IPython.display import Image, display

# Show up to five of the saved prediction images (sorted for a stable order)
for image_path in sorted(glob.glob(f'{gdrive_path}/predict/*.jpg'))[3:8]:
    display(Image(filename=image_path, width=600))
    print("\n")